Multi-modal/Multi-sensor Monitoring and Decision Support

Processing the signals from different sensors reveals properties of objects located, and/or events happening, in their vicinity. However, sensors typically operate in isolation and have no influence on each other, which is a suboptimal mode of operation: each sensor delivers its information without taking into account feedback from neighbouring sensors. Moreover, improving the reliability of the information retrieved from individual sensors has been the subject of intensive research in recent years, and this approach has reached its limits. To further improve sensor systems, sensor data therefore has to be gathered and processed collaboratively. By designing multi-modal and multi-sensor architectures, we enable collaboration among sensors (classical, video-based, time-series, mobile sensing and virtual/data mining sensors), so that the information and intelligence available across all sensors is fed back to optimize each sensor's functionality and to enhance the detection and interpretation of advanced events.
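To make the benefit of collaboration concrete, here is a minimal sketch that fuses the detection probabilities of two sensors (say, a video-based and a thermal fire detector) with a naive-Bayes rule in log-odds space. This is a textbook fusion technique swapped in for illustration, not necessarily the method used in the group's work. The sensor names, probabilities, the 0.9 alarm threshold and the assumption that the sensors are conditionally independent are all hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class SensorReading:
    name: str       # illustrative label, e.g. "video" or "thermal"
    p_event: float  # the sensor's own probability that the event is present


def logit(p: float) -> float:
    """Log-odds of a probability strictly between 0 and 1."""
    if not 0.0 < p < 1.0:
        raise ValueError("probability must lie strictly between 0 and 1")
    return math.log(p / (1.0 - p))


def sigmoid(x: float) -> float:
    """Numerically stable inverse of logit."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def fuse(readings: list[SensorReading], prior: float = 0.5) -> float:
    """Naive-Bayes fusion in log-odds space.

    Assumes (hypothetically) that the sensors are conditionally independent
    given the event and that every p_event was computed with the same prior.
    Each sensor contributes its evidence (its log-odds minus the prior
    log-odds), and the contributions simply add up.
    """
    prior_logit = logit(prior)
    total = prior_logit + sum(logit(r.p_event) - prior_logit for r in readings)
    return sigmoid(total)


ALARM_THRESHOLD = 0.9  # hypothetical alarm level

readings = [SensorReading("video", 0.7), SensorReading("thermal", 0.8)]
fused = fuse(readings)

# Neither sensor alone crosses the threshold, but their combined evidence does.
print([r.p_event >= ALARM_THRESHOLD for r in readings], round(fused, 3))
# prints [False, False] 0.903
```

With a neutral prior the fused odds are the product of the individual odds, (0.7/0.3)·(0.8/0.2) = 28/3, giving a fused belief of 28/31 ≈ 0.903. If the independence assumption is violated, for example two cameras viewing the same scene, this rule becomes overconfident.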

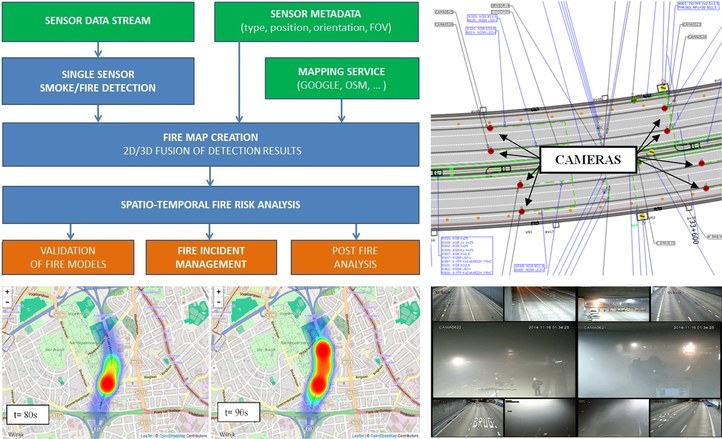

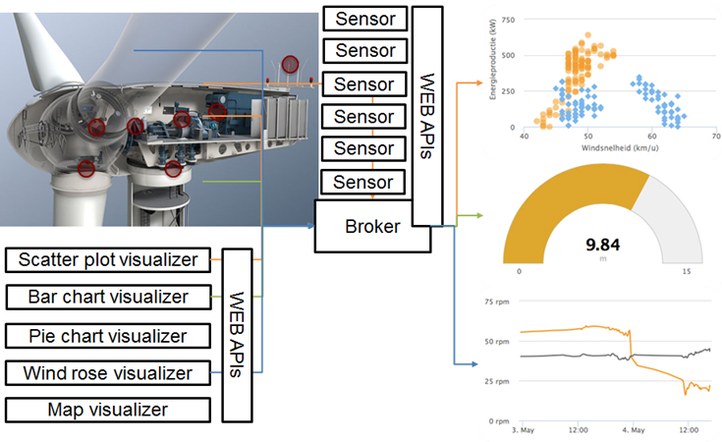

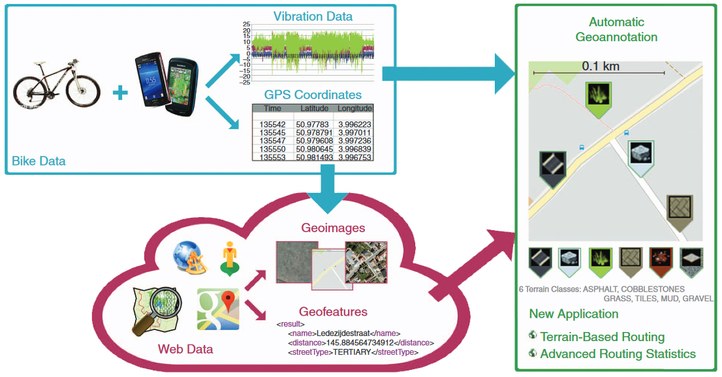

A wide range of applications can benefit from these multi-modal and multi-sensor architectures, which fuse, among others, visual, audio, thermal, vibration and/or data mining information. Examples include office or airport security and human tracking, traffic control systems, advanced smoke and fire detection, advanced health care delivery and assistance to the elderly, industrial process control or condition monitoring, automatic geo-annotation of road/terrain types, and multimedia search optimization.

The heterogeneity and sheer number of sensors, as well as the difficulty of identifying interesting combinations of sensor data, also hinder the deployment of fixed-structure dashboards, as these cannot cope with the correspondingly large number of required mappings. Therefore, we also develop dynamic dashboards that visualize precisely the sensor data of interest to the end user in multi-sensor environments. These dashboards dynamically generate meaningful service compositions, enabling the detection of complex events that previously remained undetected.
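As a toy illustration of replacing fixed mappings with compositions generated at runtime, the following minimal sketch matches sensor descriptions against visualization services that declare which sensor kinds they need, and emits a dashboard panel wherever all required kinds are available at one location. The classes `Sensor` and `VisualizationService`, the function `compose_dashboard`, and the location-based matching rule are hypothetical; a real system would likely need richer sensor and service descriptions than these simple string labels.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sensor:
    sensor_id: str
    kind: str      # illustrative labels, e.g. "temperature", "video"
    location: str


@dataclass(frozen=True)
class VisualizationService:
    name: str
    requires: frozenset[str]  # sensor kinds this widget needs together


def compose_dashboard(sensors, services):
    """Return (service name, location, sensor ids) panels.

    Sensors are grouped by location; a service is instantiated for a location
    whenever every sensor kind it requires is present there, so adding a new
    sensor or service needs no hand-written mapping.
    """
    by_location: dict[str, dict[str, list[str]]] = {}
    for s in sensors:
        by_location.setdefault(s.location, {}).setdefault(s.kind, []).append(s.sensor_id)

    panels = []
    for location, kinds in sorted(by_location.items()):
        for svc in services:
            if svc.requires.issubset(kinds):  # all required kinds present here
                ids = sorted(i for k in svc.requires for i in kinds[k])
                panels.append((svc.name, location, ids))
    return panels


sensors = [
    Sensor("t1", "temperature", "room-A"),
    Sensor("cam1", "video", "room-A"),
    Sensor("t2", "temperature", "room-B"),
]
services = [
    VisualizationService("thermal-trend", frozenset({"temperature"})),
    VisualizationService("fire-overlay", frozenset({"temperature", "video"})),
]

for panel in compose_dashboard(sensors, services):
    print(panel)
# ('thermal-trend', 'room-A', ['t1'])
# ('fire-overlay', 'room-A', ['cam1', 't1'])
# ('thermal-trend', 'room-B', ['t2'])
```

Adding a video camera in room-B would automatically produce a `fire-overlay` panel there, without editing any mapping.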

Subtopics/Keywords: Video Fire Analysis, Condition Monitoring, Visualization Dashboards, Deep Learning, Feature Engineering

Staff

Sofie Van Hoecke, Steven Verstockt, Erik Mannens, Femke Ongenae, Femke De Backere, Azarakhsh Jalavand.

Researchers

Pieter Bonte, Dieter De Witte, Alexander Dejonghe, Olivier Janssens, Viktor Slavkovikj*, Florian Vandecasteele.

Projects

- BOF-GOA UGENT, PRETREF: Prediction of Turbulent Reactive Flows by means of Numerical Simulations

- VLAIO TETRA, PROMOW: Smart Product For Mobile WellBeing

- IWT VIS O&M Excellence: Offshore Wind Operations & Maintenance Excellence

- IWT SBO HYMOP: Hypermodelling strategies on multi-stream time-series data for operational optimization

Key publications

- Janssens, Olivier, Viktor Slavkovikj, Bram Vervisch, Kurt Stockman, Mia Loccufier, Steven Verstockt, Rik Van de Walle, and Sofie Van Hoecke. 2016. “Convolutional Neural Network Based Fault Detection for Rotating Machinery.” Journal of Sound and Vibration 377: 331–345.

- Slavkovikj, Viktor, Steven Verstockt, Wesley De Neve, Sofie Van Hoecke, and Rik Van de Walle. 2016. “Unsupervised Spectral Sub-feature Learning for Hyperspectral Image Classification.” International Journal of Remote Sensing 37 (2): 309–326.

- Van Hoecke, Sofie, Ruben Verborgh, Davy Van Deursen, and Rik Van de Walle. 2014. “SAMuS: Service-oriented Architecture for Multisensor Surveillance in Smart Homes.” Scientific World Journal.

- Verstockt, Steven, Sofie Van Hoecke, Pieterjan De Potter, Peter Lambert, Charles Hollemeersch, Bart Sette, Bart Merci, and Rik Van de Walle. 2014. “Multi-modal Time-of-flight Based Fire Detection.” Multimedia Tools and Applications 69 (2): 313–338.

- Slavkovikj, Viktor. 2016. “Multimodal Analysis for Object Classification and Event Detection.” PhD thesis, Ghent University, Faculty of Engineering and Architecture.