Speech and Audio Processing

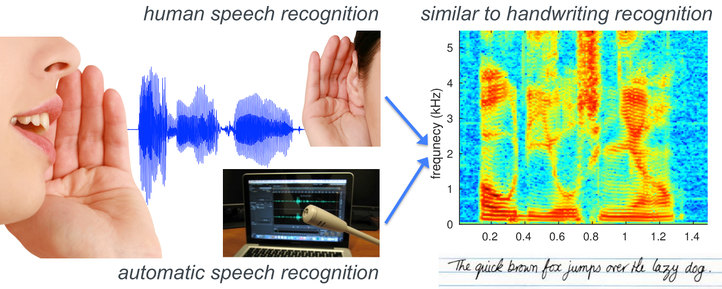

Spoken language is a prevalent way to exchange information between humans. Allowing machines to act upon this information or to interact with humans in the most natural way thus requires that machines can deduce the meaning of what is being said.

IDLab has expertise in most, if not all, aspects of speech and audio processing. We currently focus on the following challenges:

- Speech and audio acquisition in noisy environments.

For accurate interpretation, the acquired signal (speech/audio) must be of high quality. Signals acquired “in the field”, however, are often corrupted by interfering signals that can be acoustic or electrical in nature. Signal corruption arises from factors such as sensor degradation, the presence of audio sources other than the source of interest, reverberation and echoes, electrical interference, and loss of fidelity during audio coding, transmission and storage. Such degradations of the captured signal can severely affect the performance of the (back-end) system analysing the data.

Our research focusses on approaches to enhance the target signal by means of microphone arrays and adaptive beamforming techniques. We also have expertise in single-channel noise suppression and the post-processing of beamformed signals for further suppression of background interference. Within the field of microphone arrays, we conduct research into beamforming using fixed and/or ad hoc distributed microphones. The topic of post-processing and single-channel enhancement encompasses statistical approaches (signal-agnostic or model-driven) for audio enhancement. We are also interested in leveraging deep learning for these purposes, and a synergetic combination of deep learning and statistical approaches is a key research direction for us.

- Speech recognition, i.e. transcribing verbatim what is being said.

Although there has been a steady improvement in the accuracy of speech recognizers, a leap of more than an order of magnitude is still needed to attain human performance, especially in the presence of noise, reverberation, dialectal speech, ... To close this gap, IDLab investigates new dedicated machine learning approaches, new ways of combining the two main information sources (acoustics and linguistics), and various signal processing techniques. Inspiration is frequently found in theories of human speech recognition.

- Extracting non-verbal information from the audio such as speaker ID (who is speaking), expressed emotion, state of mind, and stress levels in the speech.

Such paralinguistic information is relevant in itself, e.g. to assess the quality of the customer care service in a company, or it may play an indirect role in grasping the full meaning of what is being said.

- Speech assessment.

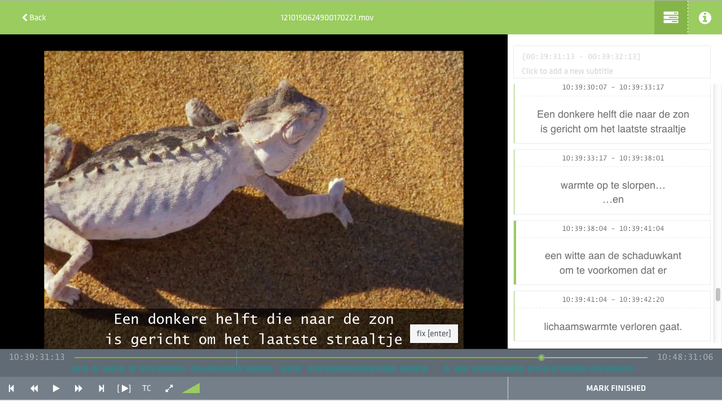

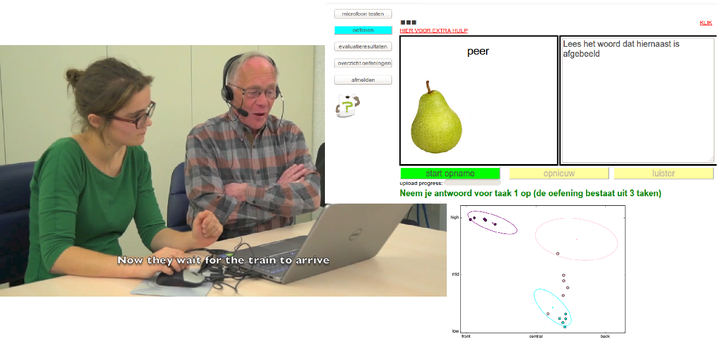

In domains such as (second) language learning, evaluation of the oral skills of “professional speakers” (e.g. interpreters), and evidence-based speech therapy, it is essential that one can assess the various aspects of speech (such as intelligibility, articulation, or phonation) in an automatic way.

A central point of attention in all these sub-domains is robustness, i.e. finding techniques that not only perform well in selected benchmark tests but also work well in real applications.

Staff

Kris Demuynck, Nilesh Madhu, Jean-Pierre Martens.

Researchers

Geoffroy Vanderreydt, Francois Remy, Jenthe Thienpondt, Alexander Bohlender, Yanjue Song, Stijn Kindt, Siyuan Song, Pratima Upretee, Jasper Maes

Key publications

- Exemplar-based processing for speech recognition

Tara N Sainath, Bhuvana Ramabhadran, David Nahamoo, Dimitri Kanevsky, Dirk Van Compernolle, Kris Demuynck UGent, Jort F Gemmeke, Jerome R Bellegarda and Shiva Sundaram (2012) IEEE SIGNAL PROCESSING MAGAZINE. 29(6). p.98-113

- Acoustic modeling with hierarchical reservoirs

Fabian Triefenbach UGent, Azarakhsh Jalalvand UGent, Kris Demuynck UGent and Jean-Pierre Martens UGent (2013) IEEE TRANSACTIONS ON AUDIO, SPEECH AND LANGUAGE PROCESSING. 21(11). p.2439-245

- An improved two-stage mixed language model approach for handling out-of-vocabulary words in large vocabulary continuous speech recognition

Bert Réveil UGent, Kris Demuynck UGent and Jean-Pierre Martens UGent (2014) COMPUTER SPEECH AND LANGUAGE. 28(1). p.141-162

- Robust automatic intelligibility assessment techniques evaluated on speakers treated for head and neck cancer

Catherine Middag UGent, Renee Clapham, Rob van Son and Jean-Pierre Martens UGent (2014) COMPUTER SPEECH AND LANGUAGE. 28(2). p.467-482

- Factor analysis for speaker segmentation and improved speaker diarization

Brecht Desplanques UGent, Kris Demuynck UGent and Jean-Pierre Martens UGent (2015) Interspeech. p.3081-3085

- Porting concepts from DNNs back to GMMs

Kris Demuynck UGent and Fabian Triefenbach UGent (2013) 2013 IEEE Workshop on automatic speech recognition and understanding (ASRU). p.356-361

- Instantaneous a priori SNR estimation by cepstral excitation manipulation

Samy Elshamy, Nilesh Madhu UGent, Wouter Tirry and Tim Fingscheidt (2017) IEEE-ACM TRANSACTIONS ON AUDIO SPEECH AND LANGUAGE PROCESSING. 25(8). p.1592-1605

- Consistent iterative hard thresholding for signal declipping

Srdan Kitic, Laurent Jacques, Nilesh Madhu UGent, Michael Peter Hopwood, Ann Spriet and Christophe De Vleeschouwer (2013) 2013 IEEE INTERNATIONAL CONFERENCE ON ACOUSTICS, SPEECH AND SIGNAL PROCESSING (ICASSP). p.5939-5943

- A Versatile framework for speaker separation using a model-based speaker localization approach

Nilesh Madhu UGent and Rainer Martin (2011) IEEE TRANSACTIONS ON AUDIO SPEECH AND LANGUAGE PROCESSING. 19(7). p.1900-1912

- Acoustic Source Localization with Microphone Arrays

Nilesh Madhu and Rainer Martin (2008) Advances in Digital Speech Transmission (R. Martin, U. Heute & C. Antweiler (eds)). p.135-170. doi:10.1002/9780470727188.ch6